Overview

As Microsoft Ignite rolls through Seattle and the online community, the sheer number of announcements will take some time to fully digest. Just skim through the customary Book of News and you will start to see the scale.

This week, here are my top three picks from a Hybrid Platforms perspective. Tim Russell will be sharing his top picks from a Modern Workspace angle too, and you can read those here.

Azure Stack HCI 23H2

The Edge paradigm

Edge is a hot topic that isn't getting the time in the limelight it deserves, mainly because Generative AI is stealing it all! But if we think about it, we realise that edge will be a mandatory component of every cloud strategy. Data is generated at the far edge (sensors) and, in many environments, will need to be processed on near-edge compute platforms. Ultimately, that data will be cleaned and shipped to a central location for larger, analytics-driven outcomes. We also need to consider that, for many workloads, AI inferencing will happen at the edge.

For organisations on a Microsoft Azure journey, deploying modern applications and services with native Azure functions, this edge element can pose a technical challenge. As internal IT functions pivot to a Microsoft toolset, efficiency and consistency could be compromised if edge deployments keep growing on non-Azure technology. Luckily, Microsoft are pushing hard with developments around Azure Arc and Azure Stack HCI to ensure that a single, consistent management experience can be achieved, regardless of where workloads run.

With Azure Stack HCI version 23H2, operations such as deployment, patching, configuration, and monitoring can now be carried out from the cloud, using the common Azure toolset and portal. A key goal is making Azure Stack HCI easier to deploy and operate at scale: when new hardware arrives at an edge location with the OS pre-installed, local teams can establish the initial network connection to Azure Arc, and deployment then continues from the cloud.

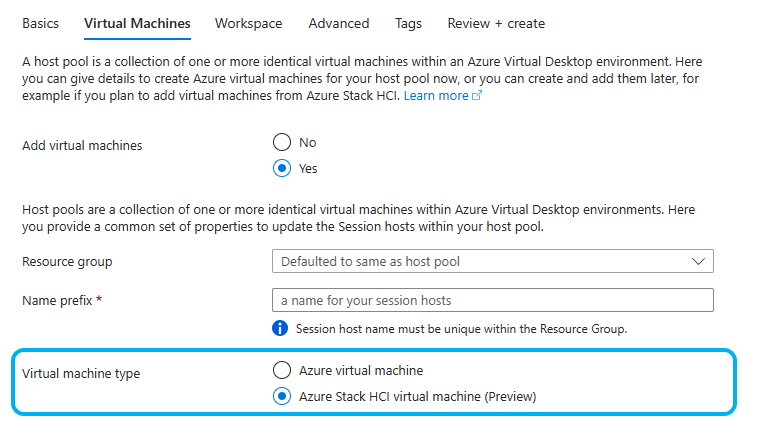

Azure Virtual Desktop (AVD) and the future of Office on Server OS

Azure Stack HCI version 23H2 continues the journey towards running AVD in on-premises environments. A long-running preview, which has faced some challenges over the last 12 months, has taken some positive steps forward. The addition of unified host pool provisioning shows Microsoft's commitment to making Azure Stack HCI a fully integrated part of AVD.

Running a virtual desktop close to the backend application has always been the optimal design decision; you don’t want that pesky WAN latency between the application and its backend database. Traditional and legacy applications will remain a challenge for years to come, and for many organisations a migration to AVD (which has many benefits) would have meant moving all those applications to Azure as well, which is not always economical or supportable.

With Microsoft bringing AVD to Azure Stack HCI, we have a viable way to run VDI and hosted applications on-premises (next to the backend servers) whilst taking full advantage of the AVD management plane and features like multi-session Windows 11 (and hopefully Windows 365, although this is not confirmed).

One of the major inflection points that will drive adoption of this technology is the impending end of support for Microsoft Office running on Windows Server operating systems. In October 2026 (which is not that far away when we are talking about larger-scale VDI migrations), all those RDSH, Horizon and Citrix farms that rely on the multi-session capabilities of Server OS will cease to be viable for any user who needs access to an Office application!

Moving those farms to Azure-based AVD or to full VDI (a dedicated OS per user rather than a shared one) are the only options available today. Each brings its own challenges: either the VDI-to-application adjacency mentioned above, or cost. Moving to AVD in the cloud, or to a single-session on-premises architecture, represents a significant change in economics; hardware alone could cost 2x to 3x as much. AVD running on Azure Stack HCI should provide a third viable option that balances both challenges.

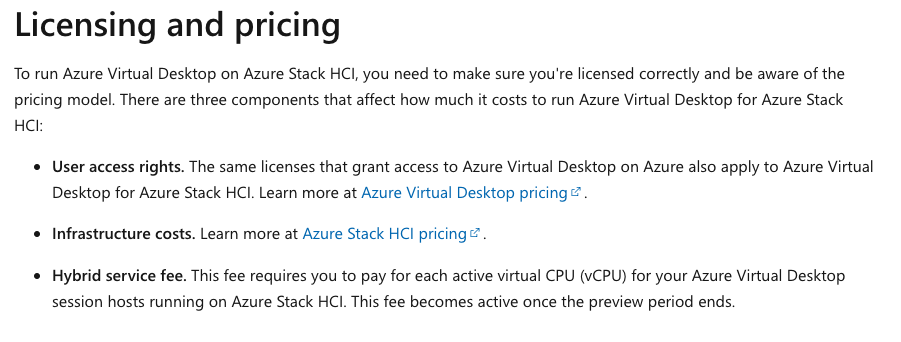

The elephant in the room is the price point for doing this, something we are all still pushing Microsoft to answer. Currently, the statement on the Hybrid Service fee reads:

Microsoft as a Chip Maker?

Gone are the days of Intel-only platforms. Intel is still the leader, with around 60% of the market, but we’re now seeing other players enter the space. With demand for AI growing exponentially, it is becoming clear that the traditional Intel/Nvidia combination will likely not be able to keep up. Intel is adding new AI functions to its latest 4th Gen Xeon CPUs whilst also releasing its own GPUs; AMD CPUs have a major part to play in current AI workloads, and AMD is bringing new GPUs to the space in 2024 as well. Now Microsoft is joining the game with Azure Maia and Cobalt, aiming to tailor everything from silicon to service to meet future AI demands within Azure.

This is the opening statement from Microsoft:

"Today at Microsoft Ignite the company unveiled two custom-designed chips and integrated systems that resulted from that journey: the Microsoft Azure Maia AI Accelerator, optimized for artificial intelligence (AI) tasks and generative AI, and the Microsoft Azure Cobalt CPU, an Arm-based processor tailored to run general purpose compute workloads on the Microsoft Cloud."

This new silicon is expected to start appearing in early 2024, underpinning services like Microsoft Copilot and Azure OpenAI Service. Plans for partnerships are vague, but we can expect services like Azure SQL to take advantage, and I am sure the integrated nature of the tech will allow for many innovations. If nothing else, it will force the other players in this space to keep driving forward.

More detailed info can be found here.

Windows AI Studio - For AI Model Configuration

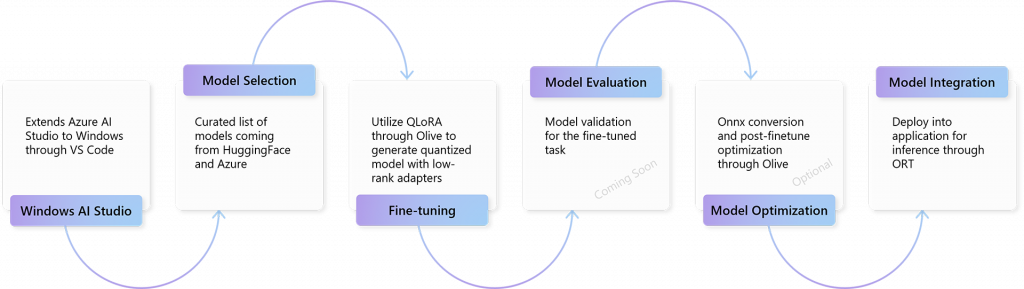

AI is, of course, the big conversation everywhere at the moment, but it can seem out of reach for many, requiring large-scale compute/GPU farms or the budget for expensive cloud platforms. At Ignite, we saw Microsoft trying to lower the barrier to entry with its Windows AI Studio announcements.

With the latest Windows 11 update, a host of developer features were added as core components of the Windows OS, with the intent of making every developer more productive on Windows. Windows AI Studio was announced as part of this evolution, helping enterprises accelerate local AI development.

It brings together development tools and models from Azure AI Studio and sources such as Hugging Face, allowing developers to fine-tune, customise, and deploy small language models (SLMs).

Microsoft writes:

"This includes an e2e guided workspace setup that includes model configuration UI and guided walkthroughs to fine-tune popular SLMs like Phi. Developers can then rapidly test their fine-tuned model using the Prompt Flow and Gradio templates integrated into the workspace"

This graphic from Microsoft really brings the concept to life:

Alongside this, Nvidia announced that TensorRT-LLM, its Python API for defining large language models (LLMs), would be made available on PCs powered by GeForce RTX 30 and 40 Series GPUs with 8 GB of VRAM or more.

The combination of Windows AI Studio and Nvidia's tooling should make AI workflows more accessible, not just for SLMs but also for LLMs, and make it easier to integrate them into business outcomes.

Full details can be found here.

Summary

Which cloud- or application-focused announcements did you take away from Ignite? With so many to choose from I am sure everyone will have something to test and understand. Personally, I am excited about the integration of the Hybrid model under a single management plane (Azure) and its ability to allow workloads to run in the most optimal location.
