If you have not read the first part of the OCTO Intro series, take a look here; it will give you context on the OCTO team and our objectives.

What is a Hybrid Platform? That is the question this article answers, by looking at the components it consists of and the functions it provides within an organisation. We will also touch on some of the trends and demands we are seeing in each of the focus areas.

At its core, a Hybrid Platform provides the execution layer for your business applications, the network fabric that connects everything, and the operational tooling your IT team needs to deliver a quality level of service. Let us dig into each of these in more detail.

Contents

- What is a Hybrid Platform

- Hybrid Observability

- Optimised Operations

- Private Cloud

- Public Cloud

- Cyber Resilience

- Edge to Cloud Networking

What is a Hybrid Platform?

Every organisation operates with a selection of applications and various repositories of data. The types of applications (legacy, traditional, web, or modern) will vary, as will the performance and location requirements of each. Data stores will range from structured databases to unstructured files, and the data lakes that bring it all together. Regardless of how far along its modernisation journey an organisation is, it will need a core set of functions to support this range of requirements. These functions define the ten focus areas of the CDW Hybrid Platforms growth pillar, as shown below:

The focus areas are underpinned by a multitude of technology solutions that drive outcomes supporting the execution, connection, protection, and operation of an ICT function's core infrastructure. Make sure you don’t miss the third part of this series, in which we will talk about the people who bring this technology to life and how it integrates with our other three growth pillars.

While these technology areas support the application and data requirements of today, we need to ensure that they evolve to support the future requirements of your organisation. This is what I call the 'platform of the future', and it needs to exhibit five key characteristics to meet those future requirements:

- Is cyber resilient: able to both withstand and recover from cyber-attacks, with inherent protection throughout the stack.

- Is hyperconnected: everything is connected and programmable, forming an ecosystem from edge to core to cloud that enables the connected enterprise to make data-driven decisions.

- Empowers simple operations: makes the most effective use of limited talent pools, driving efficiency, automation, and observability to deliver excellent customer experiences.

- Underpins sustainable outcomes: drives optimal resource consumption, with just-in-time provisioning on the most sustainable equipment and facilities.

- Is omni-application capable: supports all application types, from legacy and traditional to modern web and container-based architectures.

I hope the list above is largely self-explanatory; otherwise, this could become a very long read! I will likely write more on these characteristics in future articles. Having a defined set of characteristics lets us validate technology choices and ensure they are future-ready investments.

The ten technology focus areas compress into six key functions that are needed to support the platform of the future:

- Hybrid Observability

- Optimised Operations

- Private Cloud

- Public Cloud

- Cyber Resilience

- Edge to Cloud Networking

These six functions allow both us and you to focus on the technologies that underpin a key outcome in the overall platform architecture. Let's dig into each in a little detail (again, each is a topic for a much longer discussion). None of the options presented below should be taken as a silver bullet: your applications, business objectives, data sets, and operating markets should guide decisions and architecture, which is exactly the work our Integrated Technology Solutions (ITS) team of over 650 coworkers loves to do.

Hybrid Observability

Two things will drive the modern digital enterprise: excellent customer experience and data-driven decision-making. If we consider your customers to include both your employees and the patrons who consume your services, delivering exceptional levels of service is crucial. Talent is in short supply, driving up internal costs and placing transformation projects at risk, while customer loyalty is more fickle than ever in the digital world, making it critical to retain repeat business.

With such reliance on digital platforms, and the need for data to drive informed decisions, it is becoming imperative that organisations move beyond simple infrastructure monitoring and adopt end-to-end observability, providing a unified view from the coworker edge, through the core, and out to the customers consuming services. The ability to uncover the unknown-unknowns will be key!

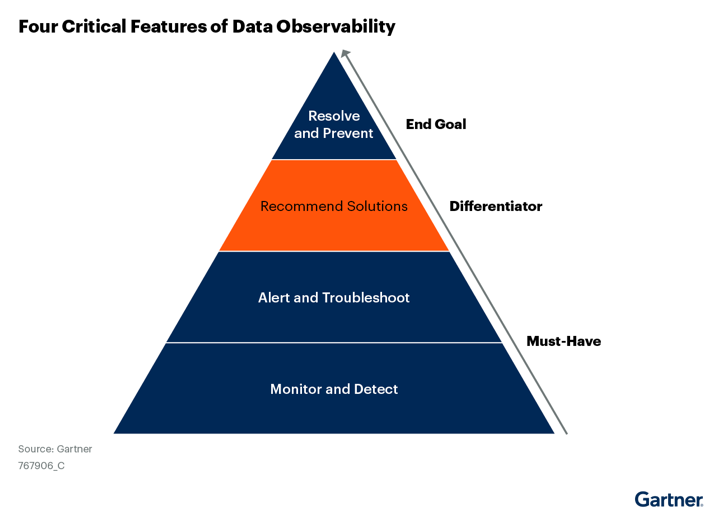

Gartner says, "By 2026, 30% of enterprises implementing distributed data architectures will have adopted data observability techniques to improve visibility over the state of the data landscape, up from less than 5% in 2023", and identifies four critical features that organisations need to consider (Data Observability Enables Proactive Data Quality).

Four critical features of data observability¹

- Ensure your workspace team’s Digital Experience Monitoring (DEM) toolset can integrate with your overall observability goals.

- Consider that true observability should be able to react to the 'unknown-unknowns', not just the 90% threshold alerts!

- Adopt and trust the latest generation of AIOps platforms to unburden your internal teams.
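
To make the difference between threshold alerting and observability more concrete, here is a minimal Python sketch, assuming a simple list of CPU utilisation samples for one service. It contrasts a static 90% threshold with a rolling z-score check that flags behaviour which is unusual for that particular service. The function names, window size, and 3-sigma cut-off are illustrative assumptions; AIOps platforms use far richer models, and a z-score only catches deviations from a recent baseline, not every unknown-unknown.

```python
from statistics import mean, stdev


def threshold_alerts(samples, limit=90.0):
    """Classic static alerting: flag any sample at or above a fixed limit."""
    return [i for i, value in enumerate(samples) if value >= limit]


def anomaly_alerts(samples, window=5, z_limit=3.0):
    """Flag samples that deviate sharply from their own recent baseline.

    For each sample, compute how many standard deviations it sits from the
    mean of the preceding `window` samples (a rolling z-score).
    """
    alerts = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is unusual.
            if samples[i] != mu:
                alerts.append(i)
        elif abs(samples[i] - mu) / sigma > z_limit:
            alerts.append(i)
    return alerts


# A service that normally idles around 20% CPU, then jumps to 55%:
# nowhere near the 90% threshold, but far outside its normal behaviour.
cpu = [20, 21, 19, 20, 22, 21, 55, 20, 21, 20]
print(threshold_alerts(cpu))  # []
print(anomaly_alerts(cpu))    # [6]
```

The static rule stays silent while the baseline-aware check flags sample 6, which is the kind of signal the threshold approach misses.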

Optimised Operations

One advantage of implementing a Hybrid Observability practice is that it provides the foundational data that is often the missing link in IT Service Management (ITSM) practices. Linking observability data with the configuration management database (CMDB) keeps this critical element accurate and helps solve the IT Asset Management (ITAM) challenge. It is still a little scary how many of the customers I speak to have significant gaps in the CMDB data they rely on to conduct operations.
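
As an illustration of linking observability data with the CMDB, the following is a minimal Python sketch, assuming both sources can be exported as a mapping of hostname to operating system version. The `reconcile` function and the sample hostnames are hypothetical; real discovery and CMDB tools expose far more attributes and their own integration APIs.

```python
from __future__ import annotations


def reconcile(discovered: dict[str, str], cmdb: dict[str, str]) -> dict[str, list[str]]:
    """Compare assets seen by observability tooling with CMDB records.

    Both inputs map hostname -> operating system version (a hypothetical,
    deliberately minimal schema).
    """
    # Running and observed, but unknown to the CMDB.
    missing = sorted(discovered.keys() - cmdb.keys())
    # Recorded in the CMDB, but no longer seen by observability tooling.
    stale = sorted(cmdb.keys() - discovered.keys())
    # Present in both, but the CMDB attributes are out of date.
    drifted = sorted(
        host for host in discovered.keys() & cmdb.keys()
        if discovered[host] != cmdb[host]
    )
    return {"missing_from_cmdb": missing, "stale_in_cmdb": stale, "drifted": drifted}


discovered = {"web01": "Ubuntu 22.04", "web02": "Ubuntu 22.04", "db01": "RHEL 9"}
cmdb = {"web01": "Ubuntu 22.04", "db01": "RHEL 8", "app09": "Windows Server 2016"}
print(reconcile(discovered, cmdb))
# {'missing_from_cmdb': ['web02'], 'stale_in_cmdb': ['app09'], 'drifted': ['db01']}
```

Each of the three reports maps to a CMDB gap: an unrecorded asset, a record for something that no longer exists, and a record whose details are wrong.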

Automation adoption is growing and, in many cases, is driven by the creation of a cloud operating model or cloud centre of excellence. Until we bring every member of each ICT team on the automation journey, we will fail to turn the corner on service quality and overburdened IT teams. The tools and practices exist, but I think we need to overcome the fear that automation will mean the end of our jobs! Of course, we need to avoid automation for automation's sake and make smart decisions. That said, if you ever need to do a full-stack rebuild, having every element automated will be a lifesaver. Add those one-time tasks to the list for future automation when time allows!

Workload optimisation is another area of operations that is generally still lacking, more so in traditional on-premises enterprise IT architectures than in cloud models (where the direct costs are easier to see). Optimising VM workloads, data storage, and application code can significantly reduce operating budgets, cutting power, storage, and compute costs as well as operational time. The impact on wider ESG initiatives should also not be underestimated.

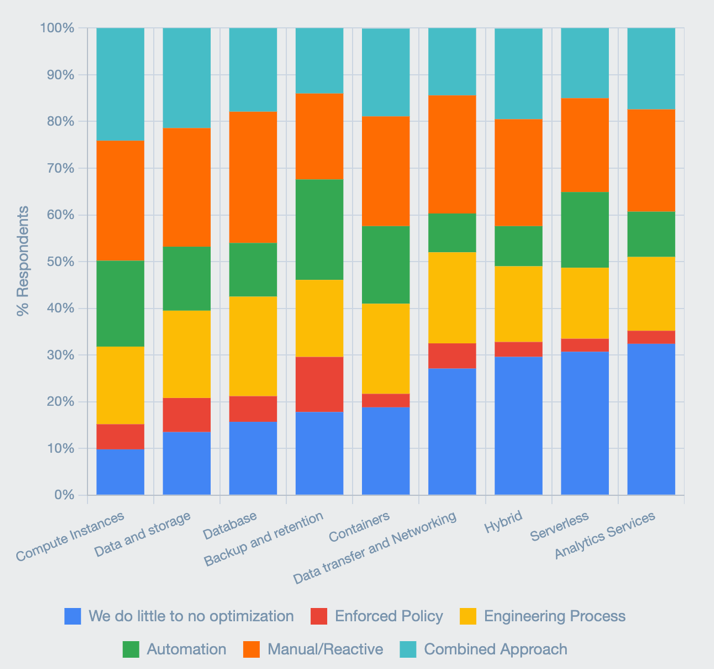

A final thought for operations is the level of FinOps maturity. While maturity continues to grow, many organisations are still mainly in the crawl or walk phases (as defined by the FinOps Foundation). The latest report from the FinOps Foundation highlights many trends, with optimisation showing notably low levels of automation. The report states, "Optimisation remains a struggle for many as the combined reporting of 'We do little or no automation' and 'manual/reactive' remain high across all service types"².

Optimisation across all services remains a challenge for everyone²

Taking the data from observability platforms and combining it with cloud cost metrics to enable data-driven decision-making will be key to unlocking the true value of the platform of the future. Trusting this data and using it to drive automation will be the final step in realising that value.
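
Here is a minimal Python sketch of combining observability data with cost data, assuming each workload record already carries an average CPU utilisation (from monitoring) and a monthly cost (from billing). The 20% utilisation cut-off and the 50% saving ratio are hypothetical parameters chosen for illustration, not benchmarks; a real rightsizing decision would also weigh memory, peaks, and licensing.

```python
def rightsizing_candidates(workloads, cpu_threshold=20.0, saving_ratio=0.5):
    """Join utilisation (from observability) with cost (from billing data).

    Returns (name, monthly_cost, estimated_saving) for under-utilised
    workloads, largest spend first. `saving_ratio` is an assumed fraction of
    cost recovered by downsizing, not a measured figure.
    """
    candidates = [
        (w["name"], w["monthly_cost"], w["monthly_cost"] * saving_ratio)
        for w in workloads
        if w["avg_cpu_pct"] < cpu_threshold
    ]
    # Largest spend first, so effort goes where the money is.
    return sorted(candidates, key=lambda c: c[1], reverse=True)


workloads = [
    {"name": "reporting-vm", "avg_cpu_pct": 8.0, "monthly_cost": 1200.0},
    {"name": "web-tier", "avg_cpu_pct": 65.0, "monthly_cost": 900.0},
    {"name": "batch-etl", "avg_cpu_pct": 12.5, "monthly_cost": 400.0},
]
for name, cost, saving in rightsizing_candidates(workloads):
    print(f"{name}: {cost:.0f}/month, ~{saving:.0f} potential saving")
```

Neither data set is enough on its own: utilisation alone does not say where the money is, and cost alone does not say what is idle.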

- Automate everything (well, almost) and build cultural acceptance throughout your teams.

- Drive maturity in FinOps practices to realise maximum value from cloud expenditure (note: 'cloud' here covers everything from on-premises to hyperscale and everything in between).

- Optimise all workloads, code, and data to drive down costs and enable wider ESG initiatives.

Private Cloud

The virtualisation wave that VMware started, and continues to innovate within, revolutionised the traditional data centre operating model. The ESX revolution began over 20 years ago (can you believe it has been that long?), and some 10 years after public cloud went mainstream we find ourselves in the interesting times of the Hybrid? Multi? Super? Cloud era (take your pick). Whatever term you choose to use, calling a basic virtual platform (hypervisor and management tools) a cloud is no longer appropriate (was it ever?). Cloud as a term needs to be redefined as the operating model it really embodies, rather than the location (AWS, Azure, GCP, etc.) it is often associated with. We now have the technologies from companies like VMware, Nutanix, Morpheus Data, and Ansible, along with many others, to deliver true cloud operating capabilities from any location of choice.

A detailed understanding of your application and data requirements should inform which cloud is the right one to run them in. If you need an OpEx-driven, self-service, developer-ready platform, then a private cloud might be the most commercially attractive option. With the option to host adjacent to the public cloud providers, many more possibilities open up: high-performance storage in the private cloud and elastic compute in the public cloud? Not a problem! Consider some of the following if you are looking to build a private cloud:

- Self-service capability with integration into ITSM tooling

- Effective resource tagging to inform showback/chargeback

- Highly automated to support scalability requirements

- Software-defined to support elastic scale and choice over time
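
To show why effective tagging matters for showback and chargeback, here is a minimal Python sketch, assuming each resource record carries a cost and an optional dictionary of tags. The `cost-centre` tag key and the resource IDs are hypothetical; every platform has its own tagging and billing export formats.

```python
from collections import defaultdict


def showback(resources, tag_key="cost-centre"):
    """Sum resource cost per value of one tag.

    Resources missing the tag are grouped under 'UNTAGGED' so that the gap
    in tagging discipline is visible rather than silently dropped.
    """
    totals = defaultdict(float)
    for resource in resources:
        owner = resource.get("tags", {}).get(tag_key, "UNTAGGED")
        totals[owner] += resource["cost"]
    return dict(totals)


resources = [
    {"id": "vm-001", "cost": 120.0, "tags": {"cost-centre": "finance"}},
    {"id": "vm-002", "cost": 80.0, "tags": {"cost-centre": "finance"}},
    {"id": "vol-017", "cost": 50.0, "tags": {"cost-centre": "marketing"}},
    {"id": "vm-042", "cost": 35.0},  # no tags at all
]
print(showback(resources))
# {'finance': 200.0, 'marketing': 50.0, 'UNTAGGED': 35.0}
```

The size of the 'UNTAGGED' bucket is a useful maturity signal in its own right: any spend that lands there cannot be shown back or charged to anyone.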

Public Cloud

Public cloud providers deliver a critical component of the platform of the future, offering the digital enterprise something it has not had in previous decision processes: near-instant access to new technology without a large upfront cost commitment, in an environment that can accelerate the development of new business opportunities without the traditional financial risk. The ability to scale resources up and down without long-term commitments should enable organisations to capitalise on opportunities that would otherwise have posed a higher risk.

The challenge is that cloud has been presented as the holy grail of infrastructure deployment, the answer to every workload in every scenario. This has led many organisations to leap into the cloud with the wrong workloads or under the wrong set of assumptions, and the growing cloud reversal trend is testament to this. Yet cloud adoption figures continue to grow, demonstrating that when adopted in the correct manner, cloud services can yield significant benefits.

What is interesting is the predicted 17.9% growth in SaaS spending¹, which suggests that many organisations are skipping the application modernisation step entirely as they truly exit the 'datacentre business'. When leveraged as part of a hybrid approach, public cloud features and offerings can deliver significant value. Consider the following as part of your strategy:

- Effective cost management as part of a mature FinOps practice

- Leveraged for the right and relevant workloads

- Operationally resilient architectures - one cloud is a risk!

- Integrated into a wider multi-cloud strategy

- Can you go straight to SaaS?

Cyber Resilience

The cyber threat is unavoidable, and the 'when, not if' mindset has become far too widespread and accepted as the de facto outcome. I would propose that we need to reset our thinking and ensure we are truly resilient, meaning we can both withstand and recover from the inevitable attack. We cannot just keep throwing money at the problem and expect the outcome to suddenly change. Watch out for much more on this wider topic from me and my fellow Chief Technologist, Greg Van Der Gast.

Focusing on effective recovery requires levelling up our understanding of applications and data. For applications, we need to be able to map all communication flows and dependencies effectively. Many organisations I speak to struggle with this level of understanding, which is one reason we don’t see widespread adoption of micro-segmentation: it is difficult. This often becomes evident during cloud migration projects, when unexpected communication flows or data repository usage are discovered, making it challenging to define new cloud security rules.
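
The mapping exercise behind micro-segmentation can be sketched in Python, assuming communication flows have already been collected (for example from flow logs) as (source application, destination application, port) tuples. The function names and application labels are hypothetical, and real segmentation platforms work with richer workload identities. The sketch also shows why the task is hard: a default-deny allow-list built from observations will block any legitimate flow that did not occur during the observation window, such as a quarterly batch job.

```python
from collections import defaultdict


def dependency_map(flows):
    """Map each source application to the applications it talks to."""
    deps = defaultdict(set)
    for src, dst, _port in flows:
        deps[src].add(dst)
    return {app: sorted(targets) for app, targets in deps.items()}


def build_allow_list(flows):
    """Turn observed (source, destination, port) flows into allow rules."""
    return set(flows)


def is_permitted(allow_list, src, dst, port):
    """Default-deny: only flows seen during discovery are allowed."""
    return (src, dst, port) in allow_list


observed = [
    ("web", "app", 8443),
    ("app", "db", 5432),
    ("app", "cache", 6379),
    ("batch", "db", 5432),
]
rules = build_allow_list(observed)
print(dependency_map(observed))
print(is_permitted(rules, "app", "db", 5432))  # True: seen during discovery
print(is_permitted(rules, "web", "db", 5432))  # False: web never talked to db directly
```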

For all data, we need a deep understanding of what is stored, where it is, who has access, its sensitivity, and its content types. Without this basic set of metadata, how can we define the required protection, understand the blast radius of a breach, or define retention policies?
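
As a minimal illustration of how that metadata answers the blast-radius question, here is a Python sketch, assuming a simple inventory where each data store records its location, sensitivity, content types, and the identities that can read it. The `DataStore` fields, sensitivity labels, and identity names are hypothetical; in practice this inventory would come from data discovery and classification tooling.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical sensitivity scale, most sensitive first.
SENSITIVITY_ORDER = {"restricted": 0, "confidential": 1, "internal": 2, "public": 3}


@dataclass
class DataStore:
    name: str
    location: str
    sensitivity: str
    content_types: list[str]
    readers: set[str] = field(default_factory=set)


def blast_radius(inventory, identity):
    """List the data stores a compromised identity could read, most sensitive first."""
    exposed = [store for store in inventory if identity in store.readers]
    # Unknown labels sort last rather than raising an error.
    exposed.sort(key=lambda s: SENSITIVITY_ORDER.get(s.sensitivity, len(SENSITIVITY_ORDER)))
    return [(s.name, s.location, s.sensitivity) for s in exposed]


inventory = [
    DataStore("hr-records", "on-prem SAN", "restricted", ["pdf", "csv"], {"hr-app", "svc-backup"}),
    DataStore("web-assets", "object storage", "public", ["png", "css"], {"web", "svc-backup"}),
    DataStore("crm-db", "public cloud", "confidential", ["sql"], {"crm-app"}),
]
print(blast_radius(inventory, "svc-backup"))
# [('hr-records', 'on-prem SAN', 'restricted'), ('web-assets', 'object storage', 'public')]
```

Without the `readers` and `sensitivity` fields the question simply cannot be answered, which is the point the paragraph makes.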

Many of the cyber challenges facing ICT departments are either policy and procedure based or driven by a lack of visibility into data and applications. Consider the following points as a starter:

- Ensure you have a deep understanding of your data: sensitivity, location, permissions, governance, etc.

- Deploy an Isolated Recovery Environment (IRE) that supports the minimum viable company (MVC).

- Write effective Cyber Recovery Plans that are not just a copy of the DR plan.

- Investigate and remediate the root causes of cyber weakness to reduce risk over time.

Edge to Cloud Networking

The foundation of the platform is its network interconnections. Digital businesses rely on information availability to inform critical decisions. Coworkers inside your organisation rely on diverse forms of connectivity to access the applications and data crucial to doing their jobs. Your customers and clients also rely on the applications and services you provide to achieve their business outcomes.

Without solid network foundations that stretch from far-edge devices (IoT, user endpoints), through near-edge locations (retail edge and AI factories), to core (private cloud) and public cloud datacentres, and out to service consumers, the digital enterprise will fail.

Many modern networks are fragmented (across vendors, technology compatibility, reporting, and security levels) and lack the control or visibility needed to enable digital organisations. I would like to see every enterprise network able to deliver a set of base capabilities:

- Source-to-destination packet visibility, from edge device right through to the cloud workload.

- End-to-end segmentation capabilities to support cyber resilience requirements.

- Natural language-enabled support that is user- and application-context aware.

- Centrally programmable to enable adaptability and ensure future-proof investments.

Summary

I believe the future is XaaS (everything as a service), and the challenge is navigating the complex journey from traditional IT and application architectures to the platforms of the future. For me, XaaS should feel and operate like the light switch at home: you press it, and the light comes on, simple! No menu asking whether you want solar, wind, or coal power, and no complex approval processes, just the desired outcome as a commodity service. How far along this journey each organisation can travel depends on many factors, and it is important to recognise that this is your transformation journey.

I am sure we would all be happy without Windows servers to patch and secure, and without complex cloud platforms to manage along with all the associated technical debt they bring. A handful of SaaS applications, some endpoint devices, and an identity provider would let us focus on core business outcomes! The challenge is that most organisations have decades of legacy and traditional applications they rely on to conduct business. It will take years to unwind all this debt, and that is why we need to approach the platforms of the future with a strategic lens.

The characteristics and functions of these platforms need to be adaptable to support this journey, delivering equally for traditional and modern application architectures, all data storage requirements, and operational requirements. Providing a blueprint and roadmap for this platform is my mission, enabling our customers to take advantage of it and accelerate their outcomes!

Final Thoughts

- Change your approach to cyber recovery - it is not the same as a traditional DR event!

- Ensure your platform strategy is future-proofed and supports your digital aspirations while reducing technical debt over time.

- Document your application and data strategy - it may uncover some interesting trends and opportunities.

¹https://www.gartner.com/en/newsroom/press-releases/2023-04-19-gartner-forecasts-worldwide-public-cloud-end-user-spending-to-reach-nearly-600-billion-in-2023

²https://data.finops.org/#3259