VMware, founded in 1998, started the virtualisation revolution in 2001 with the release of ESX Server, its first bare-metal hypervisor. It revolutionised the computing space and made things that were once impossible on physical servers a reality. It is, without a doubt, one of the biggest technology advancements of the last 20 years. Since then, VMware has gone on to revolutionise networking and storage in the same way, forging the architecture for the Software-Defined Data Centre (SDDC). For an overview of what an SDDC is and how networking forms its foundation, have a read of Part 1 and Part 2 of this series.

In the next two parts, we are going to explore the next major component, storage, looking at how VMware vSAN ushered in the Hyper-Converged Infrastructure (HCI) market.

In this part (Part 3), we look at the history of vSAN; in Part 4, we will look at the latest advancements and future innovations.

So What is vSAN?

vSAN was released in 2014, shortly after NSX, creating the foundation for the modern SDDC architecture. VMware vSAN set out to challenge traditional storage complexity and bulldoze the silos that had traditionally existed between server and storage teams inside IT functions. With the rise of virtualisation in the 2000s, demand for shared storage devices rocketed, as did the need to manage that storage in lockstep with compute (and networking; think NSX). IT teams looked for ways to consolidate the disparate skill sets that inevitably increased cost and reduced agility.

Traditional shared storage (needed to support those cool new vSphere features like vMotion and HA) was complex. Storage arrays need RAID layouts, disk groups and spares, along with volumes and LUNs to design and manage, all of which require specialist skills. Storage Area Networks (SANs) running on Fibre Channel (and later iSCSI/NFS) added further complexity and demanded even more dedicated people with skills that a traditional server technician would not have. This complexity led to the creation of software-defined storage as part of an HCI architecture.

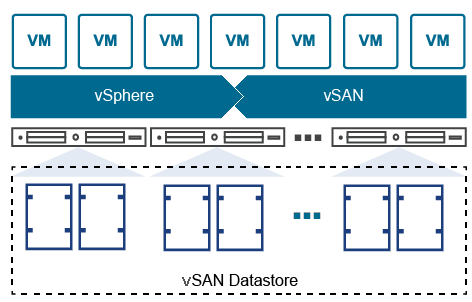

In its simplest form, vSAN aggregates local storage media from multiple server nodes to create a single pool that a vSphere cluster can consume as shared storage. The image below shows this concept: disks local to three (or more) vSphere hosts provide a shared datastore to all running virtual machines (VMs). vSAN is embedded in the ESXi hypervisor, removing the need for additional management components and improving performance and scale.

vSAN Concept

This architecture cut away the complex storage decisions and the dedicated FC or iSCSI networking, bringing storage provisioning within reach of the existing vSphere administrator. Because storage could be defined as a policy and applied automatically, vSAN changed the storage landscape forever.

vSAN History

vSAN has been around for a decade now, so it is worth a quick look at some of the key dates on the journey from the days before vSAN through to the latest release, vSAN 8:

- 2011 - The real starting point for VMware and storage, with the release of vSphere Storage Appliance v1.0.

- 2012 - The first mention of vSAN at VMworld

- 2013 - Official reveal of vSAN at VMworld

- 2014 - vSAN goes GA (6 months after VMworld)

- 2015 - vSAN 6 released alongside vSphere 6

- 2016 - vSAN 6.5

- 2017 - vSAN 6.6

- 2018 - vSAN 6.7

- 2020 - vSAN 7 released alongside vSphere 7

- 2022 - vSAN 8 released alongside vSphere 8

While vSAN was first released as part of vSphere 5.5 (we skipped versions 1-4 as these were taken by vSphere Storage Appliance), it was not until v6.5 in 2016 that we started to see real uptake and technical maturity.

vSphere 6 Era

Alongside a host of core improvements supporting easier operations, performance and stability, version 6 of vSAN brought some interesting additions: namely 2-node clusters, which could support smaller offices, and the ability to provide iSCSI storage to external servers (useful for edge cases or for creating Windows failover clusters).

2016, 2017 and 2018 brought some big advancements in the vSAN architecture. All-flash support enabled erasure coding and data reduction (more on this later), alongside fault domains for rack-level resilience and data-at-rest encryption.

Who remembers VMware Photon Controller? Arguably one of VMware's first container solutions, it gained vSAN support in 2016 as well.

Version 6.7 in 2018 kept the innovation moving with a further host of features:

- vSphere and vSAN FIPS 140-2 validation

- Windows Server Failover Clustering support

- Intelligent site continuity for stretched clusters

- Witness traffic separation for stretched clusters

- Efficient inter-site resync for stretched clusters

- Fast failovers when using redundant vSAN networks

- Adaptive resync for dynamic management of resynchronisation traffic

Whilst the version numbers remained 6.x, this three-year period cemented the core features vSAN needed to move into the enterprise storage space, providing the credibility and building blocks for the future.

vSAN 7 Era

2020 through 2022 was the vSphere and vSAN 7 era. VMware continued to innovate on the core vSAN line, bringing features like these to market:

- Simplified Management - Lifecycle Manager (LCM)

- Native File Services

- Enhanced cloud-native storage

- Enhanced 2-node and stretched cluster functionality

- Operational enhancements

- Unified Cloud Analytics with Skyline Health

- Intelligent Capacity Management

- Simplified Configuration with Routed vSAN Topologies

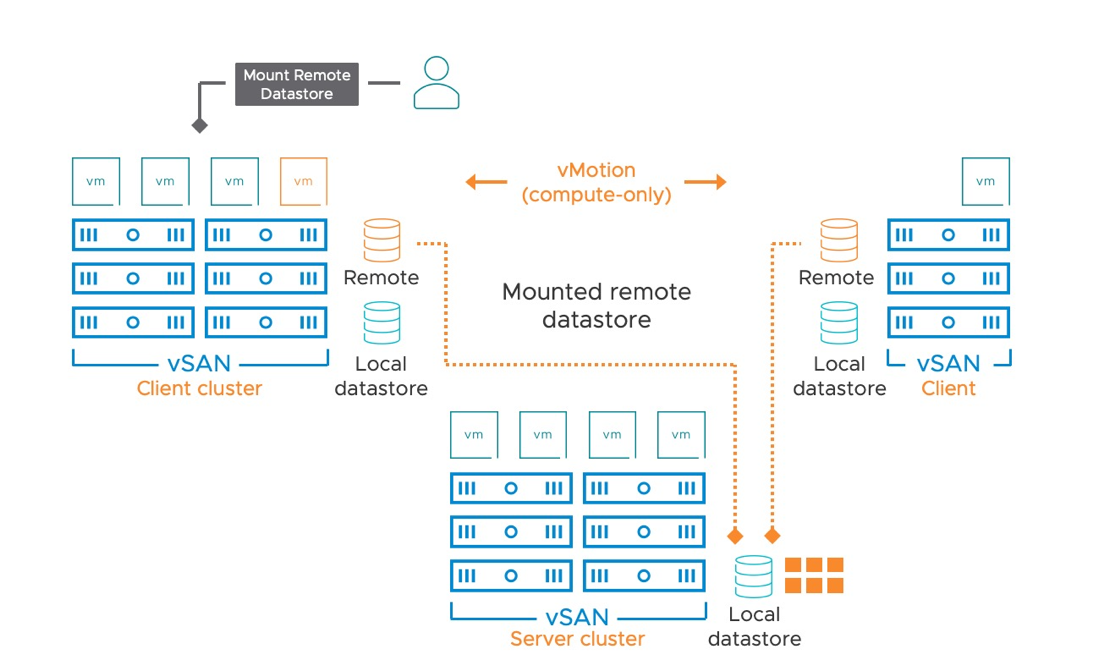

The big talking point of the vSAN 7 era was the introduction of HCI Mesh, the start of the disaggregated vSAN journey (now called 'Cross-cluster capacity sharing using vSAN HCI'; catchy, I know!).

We will talk more about the evolution of HCI and storage in Part 4, but for now it is enough to know that some challenges had started to appear with the HCI concept. One of these was that capacity is locked to the cluster that owns the local disks, which can easily leave capacity stranded in one cluster and therefore wasted. This waste would, of course, erode the value proposition of HCI.

HCI Mesh allowed capacity to be shared between clusters while still being managed within the core constructs of vSphere, retaining all the simplified operations built up over the previous years. The image below shows the concept: capacity shared between multiple clusters. This is an important step in the life of vSAN as we look at the future of disaggregation and software-defined storage in Part 4.

vSAN HCI Mesh

If you want to read more about HCI Mesh, check out this blog post from VMware:

https://blogs.vmware.com/virtualblocks/2020/09/16/introducing-vmware-vsan-hci-mesh/
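To make the stranded-capacity problem concrete, here is a minimal Python sketch. It is a toy placement check, not how vSAN actually places objects: the `Cluster` class, the `find_datastore` function and the capacity figures are all hypothetical, and it simply assumes that with HCI Mesh enabled a cluster can consume free capacity from a remote cluster's vSAN datastore.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    # Hypothetical model: a vSphere cluster and the free capacity on its vSAN datastore.
    name: str
    free_gb: int


def find_datastore(request_gb: int, local: Cluster,
                   remote: List[Cluster], mesh_enabled: bool) -> Optional[str]:
    """Return the cluster whose datastore can hold the request, or None.

    Toy logic only: classic HCI can use only the local cluster's capacity,
    while the assumed HCI Mesh behaviour also allows a remote cluster's datastore.
    """
    if local.free_gb >= request_gb:
        return local.name
    if mesh_enabled:
        for cluster in remote:
            if cluster.free_gb >= request_gb:
                return cluster.name
    return None  # Capacity exists elsewhere but is stranded from this cluster.


if __name__ == "__main__":
    compute_heavy = Cluster("cluster-a", free_gb=100)
    storage_rich = Cluster("cluster-b", free_gb=5000)
    print(find_datastore(500, compute_heavy, [storage_rich], mesh_enabled=False))
    print(find_datastore(500, compute_heavy, [storage_rich], mesh_enabled=True))
```

Without sharing, the 500GB request fails even though cluster-b has 5,000GB free; with the assumed mesh behaviour, it lands on cluster-b.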

vSAN 8 Era

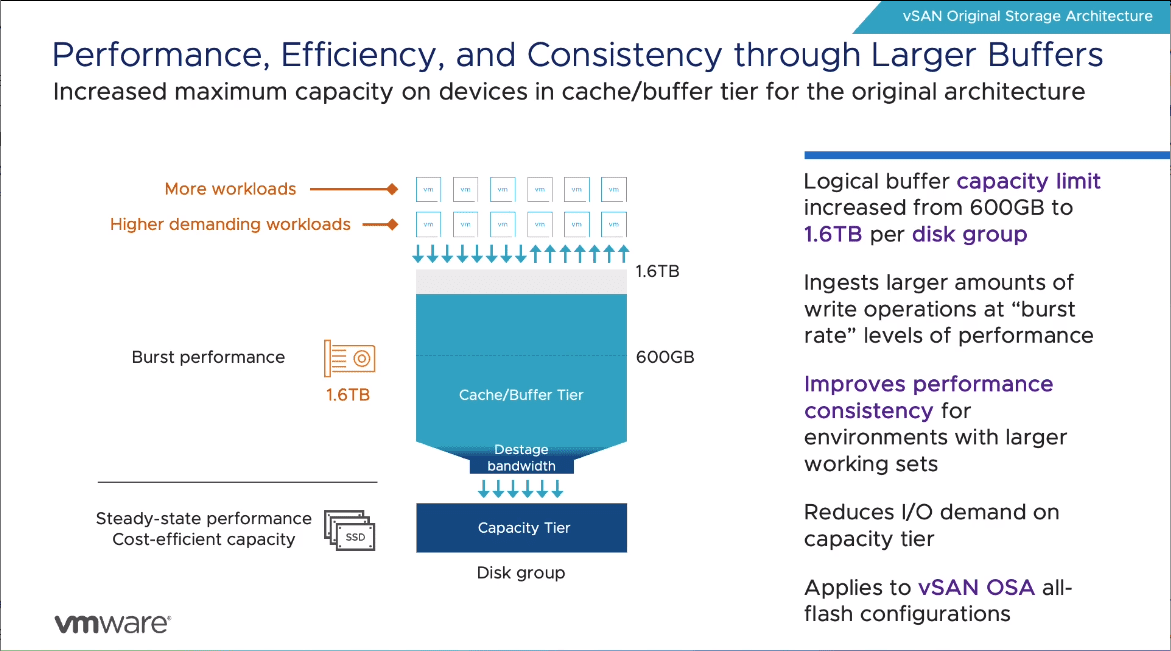

Now we are at the latest release of vSAN: version 8 Update 2, released on 21 September 2023. Three years after the release of vSAN 7, and nearly a decade since vSAN went GA (general availability), the world of storage had changed. Applications were demanding more performance and larger capacities, and hardware had advanced significantly, particularly SSD, NVMe and networking. It was time for vSAN to move into the next decade, and it was becoming clear that some of its core principles would not support that change. This led to one of the biggest shake-ups in the life of vSAN: the Express Storage Architecture (ESA). More on that in Part 4.

We did still see some advancements for the traditional architecture, like increased buffer sizes that help with larger workloads.

Before we move on to Part 4 and look at that future in more detail, I want to touch on the impact of flash on vSAN.

The Flash revolution

It is worth a quick stop to look at how flash shaped the evolution of vSAN, as it will be important when we look at its future direction. All-flash rights in vSAN licences moved to the Standard edition in 2016, making all-flash accessible to more customers and signalling a changing tide in storage performance (wow, the flash conversation is now 7 years old!).

With the addition of All-Flash vSAN, we had an architecture that could finally remove spinning media. The resulting performance increase made new capabilities possible in the vSAN architecture, namely Erasure Coding and Data Services. It's important to remember that vSAN had always used flash, but in hybrid configurations only as a cache, relying on traditional spinning disks for capacity.

Erasure coding was a key step for vSAN as it removed the reliance on RAID 1/10. Whilst these RAID levels provided fantastic performance in a hybrid world, they carried a significant capacity penalty that would not play well in an all-flash future. In a RAID 1 (mirror) configuration, each write consumes 2x from the underlying storage, so 100GB of data requires 200GB of disk, whilst RAID 5 (three data components plus one parity component) requires only about 133GB of disk for the same protection level. (I know we have not mentioned Failures to Tolerate (FTT) values; one for another day.)
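The capacity arithmetic above can be checked with a short Python sketch. It assumes, as in the example, protection against a single failure, with RAID 1 keeping two full copies and RAID 5 striping three data components plus one parity component. The `raw_capacity_gb` helper is a hypothetical name for illustration, and the sketch ignores other overheads such as slack space.

```python
def raw_capacity_gb(usable_gb: float, data_components: int,
                    redundancy_components: int) -> float:
    """Raw disk needed to store `usable_gb` of data.

    Each stripe holds `data_components` pieces of data plus
    `redundancy_components` extra pieces (mirror copies or parity).
    """
    return usable_gb * (data_components + redundancy_components) / data_components


# Layouts from the paragraph above (protection against one failure assumed).
SCHEMES = {
    "RAID 1 (mirror)": (1, 1),              # 1 copy of data + 1 mirror copy
    "RAID 5 (3+1 erasure coding)": (3, 1),  # 3 data components + 1 parity
}

if __name__ == "__main__":
    for name, (data, redundancy) in SCHEMES.items():
        raw = raw_capacity_gb(100, data, redundancy)
        print(f"{name}: 100GB of data needs {raw:.0f}GB of raw disk")
```

Running it prints 200GB for RAID 1 and 133GB for RAID 5, matching the figures above: the overhead falls from 2x to roughly 1.33x.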

For a deep dive on the topic of Erasure Coding check out this VMware blog:

https://blogs.vmware.com/virtualblocks/2018/06/07/the-use-of-erasure-coding-in-vsan/

The other advantage all-flash brought was the ability to use deduplication and compression, or 'Data Services'. Because calculating the metadata for these technologies was disk IO-intensive, it was only an option with the performance that flash brought. It should be noted that, compared with mainstream arrays, deduplication was less effective because of limitations in the disk group architecture (see the ESA section for more detail): deduplication only operated within each disk group rather than across the whole cluster.

Combining these two features made the economics of all-flash feasible, for the right workloads, compared with traditional hybrid architectures. The move from SSD to NVMe, though, would usher in the change in core vSAN architecture that we touched on earlier: the Express Storage Architecture (ESA).
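The disk group limitation can be illustrated with a toy Python sketch. It assumes identical blocks end up in different disk groups (for example, the same OS image blocks belonging to VMs whose data sits in both groups) and compares deduplicating within each disk group against a hypothetical cluster-wide scope. The block contents, the helper names and the use of SHA-256 fingerprints are illustrative assumptions, not vSAN internals.

```python
import hashlib
from typing import Iterable, List, Set


def fingerprints(blocks: Iterable[bytes]) -> Set[str]:
    # Identify duplicate blocks by content hash (SHA-256 chosen for illustration).
    return {hashlib.sha256(block).hexdigest() for block in blocks}


def stored_per_disk_group(disk_groups: List[List[bytes]]) -> int:
    # Deduplicate within each disk group only: a block present in two groups is stored twice.
    return sum(len(fingerprints(group)) for group in disk_groups)


def stored_cluster_wide(disk_groups: List[List[bytes]]) -> int:
    # Hypothetical comparison: deduplicate across every disk group at once.
    return len(fingerprints(block for group in disk_groups for block in group))


if __name__ == "__main__":
    groups = [
        [b"os-block-1", b"os-block-2", b"app-a"],
        [b"os-block-1", b"os-block-2", b"app-b"],
    ]
    print("Per disk group:", stored_per_disk_group(groups))
    print("Cluster-wide:", stored_cluster_wide(groups))
```

Here six blocks are stored with per-disk-group deduplication versus four with a cluster-wide scope, because the shared OS blocks are kept once in every disk group that holds them.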

vSAN innovation pace

Hopefully this article has demonstrated the pace of innovation through the early years of vSAN, and how VMware has started to address rising challenges in HCI to protect its customers' investment in the technology. In Part 4 we will take a look at the latest innovations and futures for vSAN.