Welcome to part 4 of the VMware Software-Defined Datacentre series, focusing on the VMware portfolio. In this part, we are continuing the vSAN discussion with a focus on the new architecture and future innovations. Please check out Part 1, Part 2 and Part 3 of this series.

Stretched Clusters!

Before we get into the new stuff, we need to discuss one elephant in the vSAN room – stretched clusters! I always find it an interesting conversation. Introduced in vSAN 6.1 around 2015, it’s a proven technology now, but I still see some common misconceptions.

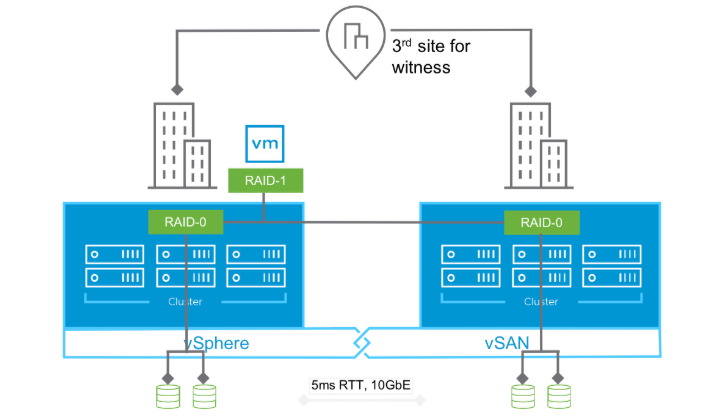

What is a stretched cluster? The concept is quite simple: a vSAN object (a virtual disk, for example) has a RAID level defined for protection in its local site, and the entire object is then mirrored (RAID 1) to an identical set of hardware in a remote site. This can be seen in the diagram below:

vSAN Stretched Cluster concept
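To put a number on what that protection costs, here is a rough capacity sketch in Python. The function name and the overhead table are my own illustrative assumptions (standard RAID multipliers, not output from any VMware sizing tool), and real sizing also needs headroom for rebuilds and metadata.

```python
# Illustrative capacity multipliers for the protection applied inside each site.
# These are textbook RAID overheads, assumed here for the sake of the example.
LOCAL_OVERHEAD = {
    "none": 1.0,         # no local protection, site mirror only
    "raid1_ftt1": 2.0,   # two full copies inside the site
    "raid5_3p1": 4 / 3,  # 3 data + 1 parity
    "raid6_4p2": 1.5,    # 4 data + 2 parity
}


def stretched_raw_capacity(usable_tb: float, local_scheme: str) -> float:
    """Raw capacity needed across both sites for a given usable capacity."""
    site_copies = 2  # the whole object also exists in the second site
    return usable_tb * LOCAL_OVERHEAD[local_scheme] * site_copies


for scheme in LOCAL_OVERHEAD:
    raw = stretched_raw_capacity(10, scheme)
    print(f"{scheme:12s} -> {raw:.1f} TB raw for 10 TB usable")
```

The point of the sketch is simply that the second site doubles whatever the local policy already costs, which is worth knowing before the hardware is ordered.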

As the writes in a stretched cluster are synchronous to the second site, there are network latency and bandwidth constraints to consider, but for ultimate data availability it is a fantastic solution. It is even better in metro or campus environments, where we can control latency and bandwidth at reasonable cost!

The reason I think it's an interesting discussion is the number of stretched cluster deployments I encounter that are termed Disaster Recovery solutions. Organisations rely 100% on synchronous replication and automated VMware failover to protect against all possible outage scenarios. I agree it can play a part in the overall DR strategy, but having seen what can happen when software goes wrong (taking out a full cluster), you need to have other options in that strategy. Separate backup and recovery environments are essential, as a failure in the primary site can easily be replicated to the second site and take out your entire infrastructure!

ESA evolves software-defined storage

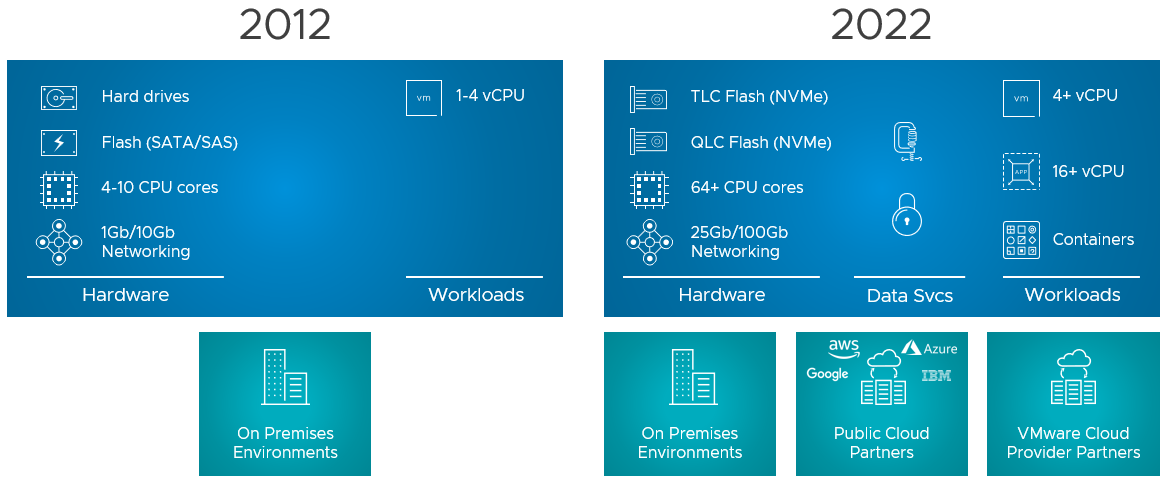

As we touched on in Part 3 of this series, VMware needed to address some emerging challenges in the vSAN architecture, which would ensure its ability to take advantage of the next generation of hardware. NVMe storage unlocks new levels of potential performance, and the current architecture was not built for such outcomes.

Now we have two architectures to consider for vSAN: OSA (Original Storage Architecture) and ESA (Express Storage Architecture). Both are valid, and VMware is committed to OSA for the near future, as they understand customers have existing investments. As hardware is refreshed, ESA should be considered for its numerous benefits, but why was ESA needed?

Firstly, it was needed to take advantage of newer hardware, but also to tackle some of the limitations in vSAN scaling and to advance the HCI Mesh concepts from earlier versions of vSAN. Each of these was needed to ensure that vSAN could meet the performance, availability and capacity demands of modern application workloads. ESA is the evolution of HCI, solving the next wave of challenges as highlighted in the image below:

The evolution of HCI

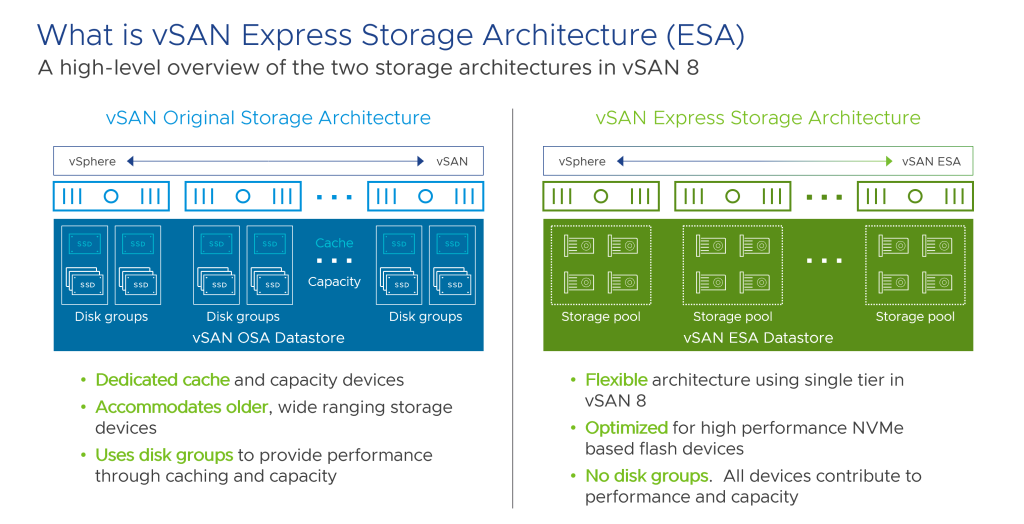

Another core concept of OSA was that of disk groups, something we hinted at earlier in the series. Looking at the image below, we can see (on the left) the OSA architecture. Each physical host would have one or more disk groups that combined to form the overall storage pool. A disk group would be one cache disk (SSD) and several capacity disks (spinning or SSD). The challenge here was that disk groups became the boundary for failure and data efficiency. If the cache disk failed, all the capacity disks behind it would also go offline! No data would be lost, but it could cause long rebuild times, performance impacts and, without proper design, reduced data protection.

Deduplication was also per disk group and not global to the cluster, decreasing potential savings as common blocks could not be identified and removed.
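A toy model makes the cost of that boundary easier to see. The Python sketch below is not how vSAN implements deduplication; the function names, disk group labels and block fingerprints are all made up for illustration. It just counts how many unique blocks have to be stored when duplicates can only be spotted inside a disk group, compared with a hypothetical cluster-wide scope.

```python
from collections import defaultdict


def stored_blocks_per_group(placements):
    """Count blocks kept when duplicates are only removed within a disk group.

    placements is an iterable of (disk_group, block_fingerprint) pairs.
    """
    unique_per_group = defaultdict(set)
    for group, fingerprint in placements:
        unique_per_group[group].add(fingerprint)
    return sum(len(blocks) for blocks in unique_per_group.values())


def stored_blocks_global(placements):
    """Count blocks kept if duplicates could be removed across the whole cluster."""
    return len({fingerprint for _, fingerprint in placements})


# Eight written blocks, but only three distinct fingerprints (A, B, C).
placements = [
    ("dg1", "A"), ("dg1", "A"), ("dg1", "B"),
    ("dg2", "A"), ("dg2", "B"), ("dg2", "C"),
    ("dg3", "A"), ("dg3", "C"),
]

print(stored_blocks_per_group(placements))  # 7
print(stored_blocks_global(placements))     # 3
```

Block A is common to all three disk groups, yet with a per-group scope it is stored three times. The more disk groups a cluster has, the more of these missed savings add up.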

These two limitations would increase costs, as design changes were needed to mitigate the weaknesses. The OSA architecture was a necessity in the time of spinning disks: the cache disk provided the performance that spinning media could not deliver. As we move into an NVMe world, we can look at new designs that bring greater benefits.

ESA (on the right) removes this concept of disk groups and moves to a flexible, single tier of disk in which all disks contribute to both capacity and performance. ESA allows vSAN to take full advantage of modern hardware and meet new application demands.

OSA v ESA Architectures

Along with this change in disk architecture, ESA brings some impressive features that allow vSAN to align with modern enterprise storage devices, such as:

- The space efficiency of RAID-5/6 erasure coding with the performance of RAID-1 mirroring.

- Adaptive RAID-5 erasure coding for guaranteed space savings on clusters with as few as 3 hosts.

- Storage policy-based data compression offers up to 4x better compression ratios per 4KB data block than the vSAN OSA.

- Encryption that secures data in-flight and at rest with minimal overhead.

- Adaptive network traffic shaping to ensure VM performance is maintained during resynchronisations.

- Lower total cost of ownership (TCO) by removing dedicated cache devices.

- Simplified management and smaller failure domains by removing the construct of disk groups.

- New native scalable snapshots deliver extremely fast and consistent performance.
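The adaptive RAID-5 point is easier to picture with a little arithmetic. The Python sketch below assumes my understanding of the behaviour, which is a 2+1 stripe on smaller clusters and a 4+1 stripe once there are six or more hosts. Treat those thresholds and the function names as assumptions for illustration and check the current VMware documentation before relying on them.

```python
def adaptive_raid5_layout(host_count: int) -> tuple[int, int]:
    """Return an assumed (data, parity) stripe layout for a given cluster size."""
    if host_count < 3:
        raise ValueError("RAID-5 needs at least 3 hosts in this sketch")
    # Assumed threshold: the wider stripe is used from 6 hosts upwards.
    return (4, 1) if host_count >= 6 else (2, 1)


def capacity_multiplier(data: int, parity: int) -> float:
    """Raw capacity consumed per unit of usable capacity."""
    return (data + parity) / data


for hosts in (3, 5, 6, 12):
    data, parity = adaptive_raid5_layout(hosts)
    multiplier = capacity_multiplier(data, parity)
    print(f"{hosts:2d} hosts -> {data}+{parity} stripe, {multiplier:.2f}x raw per usable TB")
```

Either way the overhead stays below the 2x of a RAID-1 mirror, which is where the space savings on small clusters come from.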

ESA does bring some more specific design requirements to the table, meaning it might not be an automatic choice. Here are a few things to consider as you plan your architecture:

- For storage, you can use NVMe devices of Class D or higher for endurance and Class F or higher for performance.

- NICs with a network speed of 25Gbps are a hard requirement.

- A minimum of 2 nodes (up to 64 nodes).

- vSAN ESA only supports SSDs, so no hybrid architecture with a mix of SSDs and HDDs.

- vSAN ESA supports a maximum of 24 drives per node.
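If you want to sanity-check a proposed design against the list above, something like the following Python sketch can help. The `HostSpec` class and `esa_issues` function are hypothetical names of my own, not part of any VMware tool, and the sketch only covers node count, NIC speed, drive type and drive count. It leaves out the endurance and performance class checks, which should be confirmed against the VMware compatibility guide.

```python
from dataclasses import dataclass


@dataclass
class HostSpec:
    """Hypothetical description of one host in a proposed ESA cluster."""
    name: str
    nic_gbps: int
    drive_types: list[str]  # one entry per storage device, e.g. "nvme" or "hdd"


def esa_issues(hosts: list[HostSpec]) -> list[str]:
    """Return a list of problems found against the requirements listed above."""
    issues = []
    if not 2 <= len(hosts) <= 64:
        issues.append(f"cluster: {len(hosts)} node(s) is outside the 2-64 range")
    for host in hosts:
        if host.nic_gbps < 25:
            issues.append(f"{host.name}: NIC is {host.nic_gbps}Gbps, 25Gbps required")
        if any(kind != "nvme" for kind in host.drive_types):
            issues.append(f"{host.name}: non-NVMe device present")
        if len(host.drive_types) > 24:
            issues.append(f"{host.name}: {len(host.drive_types)} drives exceeds the limit of 24")
    return issues


proposed = [
    HostSpec("esx01", 25, ["nvme"] * 8),
    HostSpec("esx02", 10, ["nvme"] * 6 + ["hdd"] * 2),
]

for issue in esa_issues(proposed):
    print(issue)
```

An empty list means the design passes these basic checks, nothing more. It is a quick filter before the proper compatibility work, not a replacement for it.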

In summary, vSAN ESA architecture is faster, uses less CPU and is more data-efficient. It paves the way for the next wave of disaggregation, ultimately providing customers with choice for each workload.

vSAN MAX

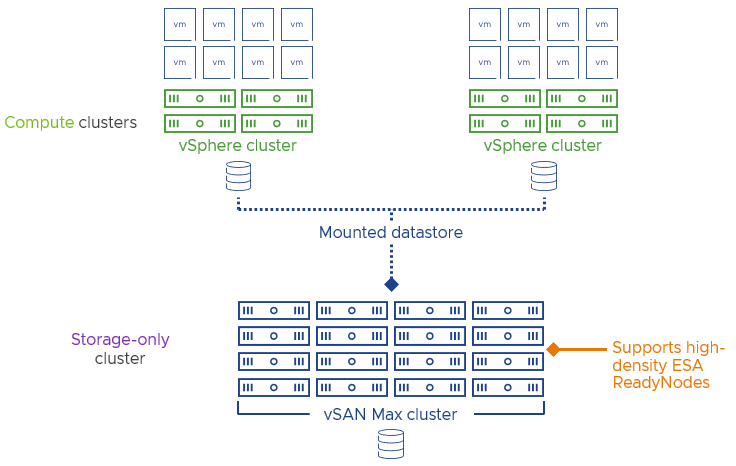

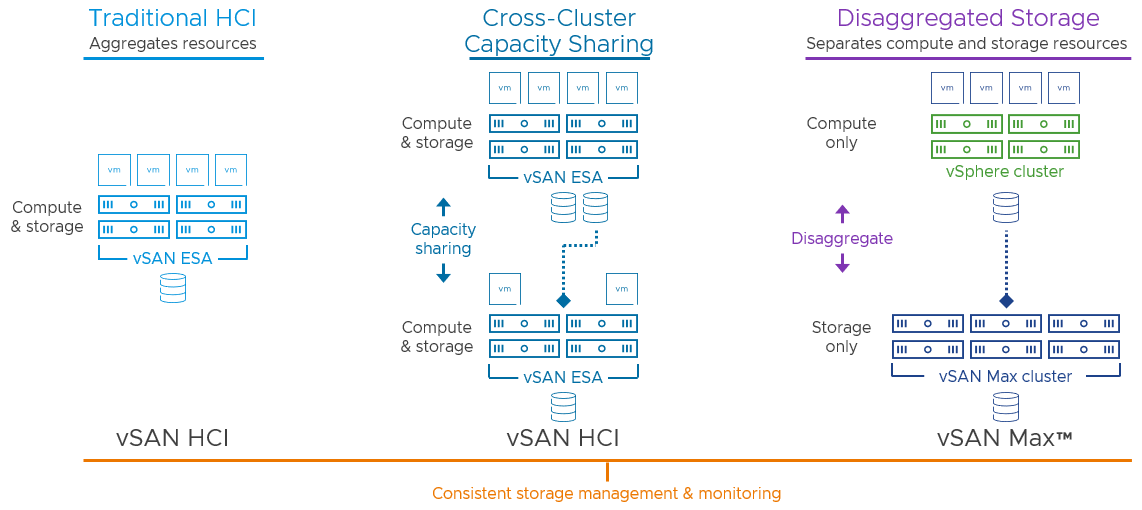

vSAN MAX is a distributed scale-out storage system for ESX clusters, building on the ESA architecture, the shared resource concepts of HCI Mesh and the concepts of disaggregation. vSAN MAX allows for the deployment of a dedicated ESA storage cluster (using cut-down licences) that can be consumed by multiple vSphere compute clusters, as shown below:

vSAN MAX Concept

This allows for building a software-defined scale-out storage cluster that can consume storage-dense nodes without having to also process compute workloads. Storage and compute can then be scaled independently, as you would with servers and a storage array, but with all the benefits of software-defined storage. For those needing large scalable capacity to support mission-critical workloads, vSAN MAX will deliver!

Choice is king and VMware have given customers all the options to meet growing demands. Having a small branch office with traditional HCI using ESA could be the right set-up. If managing a larger site with multiple clusters, it’s possible to unlock capacity islands with cross-cluster capacity sharing (what was Mesh). Or if you are looking to design a large private cloud, which can scale for all modern data sets, consider disaggregation with vSAN MAX.

But rest assured that all of these options fall into the same management and monitoring workflows and embody the principles of software-defined.

What about some of the services we noted being added to vSAN over a 4 to 5-year period like Data-at-Rest Encryption, File Services, iSCSI services, or S3-compatible object stores? vSAN Max can do that too as it is built on the ESA architecture. Even the stretched cluster topologies with vSAN Fault Domains are available to ensure maximum deployment flexibility.

The evolution from OSA, through HCI Mesh, into ESA and MAX shows, I think, that VMware are always thinking ahead and planning to ensure vSAN continues to provide value to its customers.

Summary and Looking to Explore 2023

I am looking forward to getting more deep-dive detail on vSAN MAX at Explore in Barcelona and seeing what VMware do next in the world of software-defined everything. The image below really sums up the innovation over a decade and how workload needs have changed.

vSAN Evolution

Let me know what part of the SDDC you would like me to cover next. I was considering exploring the move into the public cloud with technologies like VMware Cloud on AWS and Azure VMware Solution.